This is the eleventh post in our Mixmax Advent series and the first part of an ongoing series documenting the evolution of Mixmax’s architecture and infrastructure over time. We’re a long way from where we started way back in 2014! In this post, we’ll cover the first two years of Mixmax history.

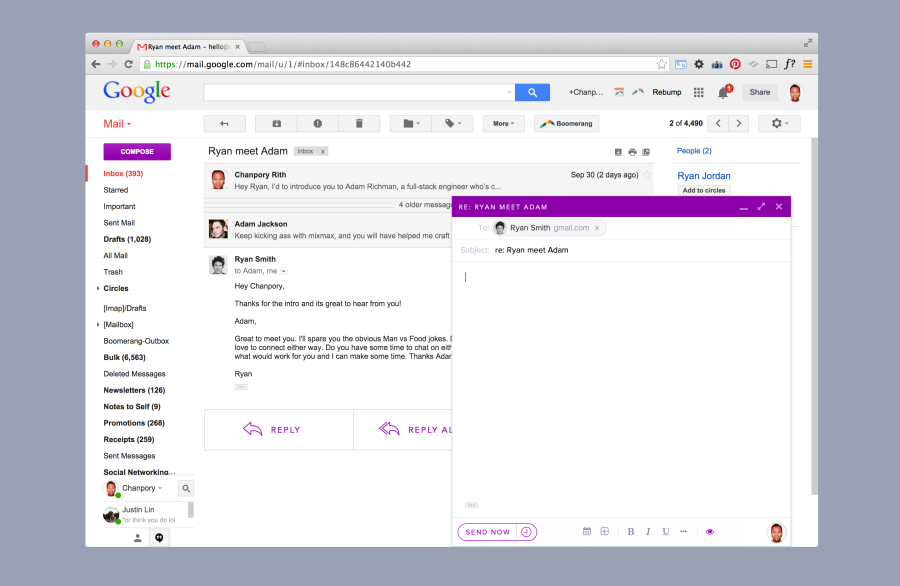

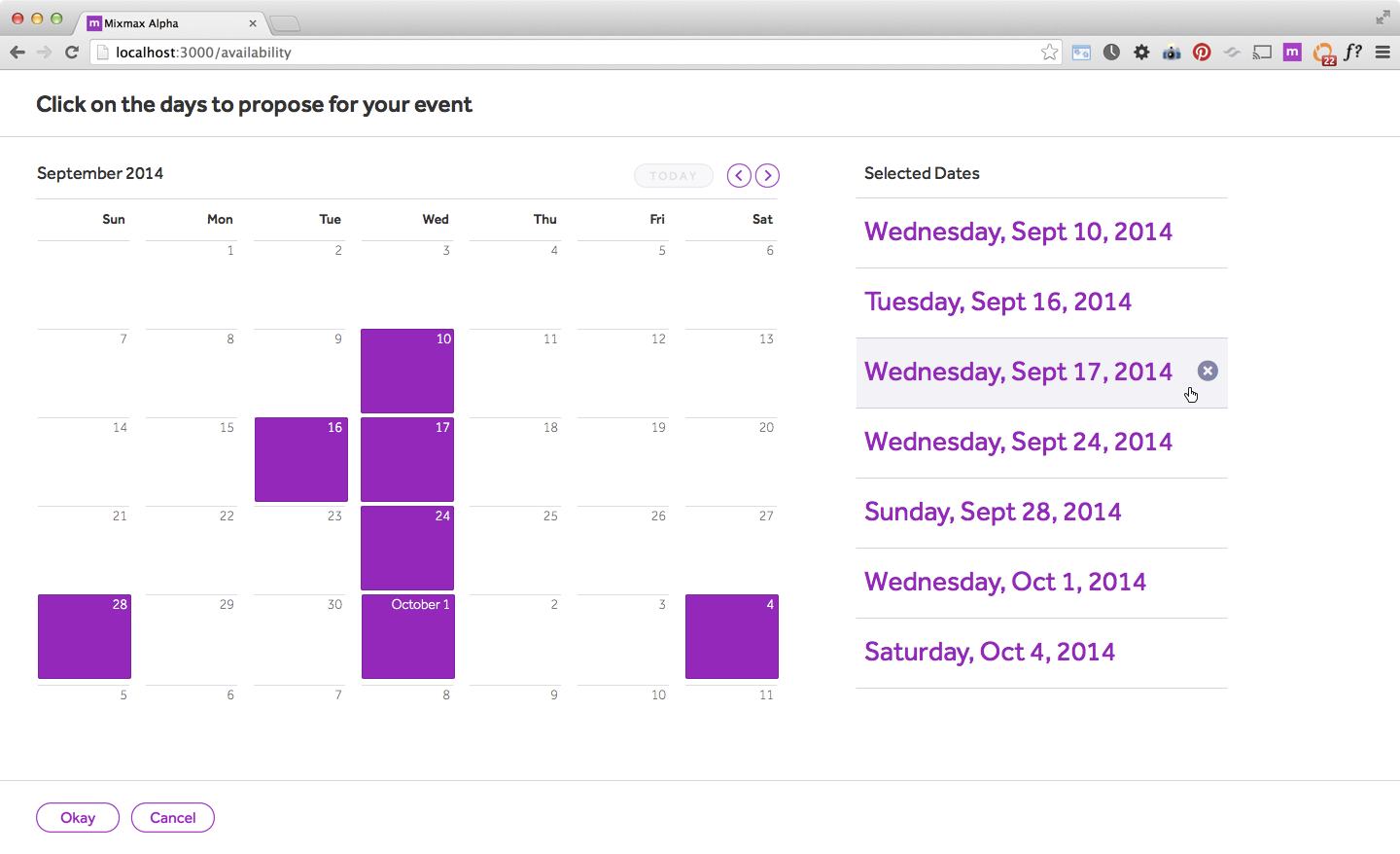

Mixmax started in mid-2014 as a prototype of a web-based email editor. The idea was that you could embed “smart enhancements” into your email, such as an interactive slideshow or meeting links picked from your Google Calendar availability. The content could then be sent to someone or shared via a URL. Even the company name, “Mixmax”, was chosen to suggest that you could “mix” your content and “maximize” its impact.

Over the years, we’ve needed to constantly evolve our infrastructure and technical choices to keep up with growing demand. Mixmax has gone from a prototype Chrome extension with just a few users to a platform that tens of thousands of professionals use daily to do their jobs. Check out these old screenshots of baby Mixmax!

The original Mixmax prototype was written in a JavaScript framework called MeteorJS. We chose Meteor because it was, at the time, the go-to framework for building and hosting a simple web app. Meteor, in turn, had chosen to bundle Node and Mongo, because they, too, were very popular in 2014. 😉

Choosing Meteor allowed us to focus exclusively on feature development in the first year of the company and thus get to product-market fit quickly. It enabled us to deliver a polished experience at a small scale. Its core “reactivity” feature helped us make an app that was realtime, which continues to be a core part of our product experience today. It also made it easy to share code between the client and server (“isomorphic code”), which shortened development time for us, as a lot of the prototype’s code ran on both server and client.

From that original Meteor prototype to where we are today, our architecture history can be roughly summarized as breaking apart our original “Meteor monolith” into a new microservice architecture. The timeline below explains each step and why we made the technology choices we did.

Timeline

Jul 2014: Our first prototype was built using Meteor and hosted on Modulus.io. We chose Modulus because it was the only PaaS that supported single-command Meteor app deployment (not even Heroku did). Our data was stored in MongoDB hosted with Compose.io, on the recommendation of the Meteor team (since Meteor and Compose were both in the same YC class). We used Codeship for deployment because they were the easiest to get set up at the time.

Sep 2014: We rewrote our first prototype into something a bit less hacky, and took the opportunity to make the code more modular so that we could start pulling parts of it out into their own services. We knew at this point that Meteor wasn’t going to scale as a monolith, as we had many outages due to server CPU saturation.

Feb 2015: We started to break apart the Meteor monolith by moving our contact management code into its own service. Next we split out services for insertable email apps (to better integrate third-party npm modules) and for sending email (for stability). We decided to continue to use Node because the team was already proficient in JavaScript and it was much easier to port code from the prototype (i.e., we didn’t need to rewrite it in another language). For async operations, we decided to continue using Fibers (via a wrapper library called synchronize), which Meteor used to make isomorphic JavaScript more readable. These decisions allowed us to mostly copy-and-paste code from the Meteor monolith codebase, without needing to rewrite it using Promises. We also decided to stick with Mongo since the schema-less design allowed us to develop more quickly. To send email, we used a distributed queue called Kue, which used Redis as a broker. We made this choice due to our experience with the tools, and because it was easy to host Redis deployments on Compose.io, where we were already hosting Mongo.

May 2015: The next code to be pulled out of the Meteor monolith was the email editor. This solved a big performance complaint from customers that Mixmax was too slow to load in Gmail (because Meteor couldn’t render server-side). We chose to implement the frontend in Backbone because we had team expertise in it. Backbone also uniquely supported attaching views to server-side rendered content, which other frameworks, including Angular and ReactJS, did not support at the time. We even built a small library to add reactivity support to Backbone, so it felt more like a Meteor app.

Aug 2015: We created a “notifications service” to abstract publishing data to our Redis. Down the line, this would end up powering Mixmax’s rules engine, which at the time was but a twinkle in our eye.
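To make the idea of “abstracting publishing” concrete, here is a minimal sketch of what such a service boundary might look like. It is not Mixmax’s actual code: the class names, the channel naming scheme, and the event shape are all hypothetical, and an in-memory transport built on Node’s `EventEmitter` stands in for Redis so the example runs on its own.

```javascript
const { EventEmitter } = require('events');

// Hypothetical in-memory transport standing in for Redis pub/sub.
// A real transport would wrap a Redis client's publish/subscribe calls.
class InMemoryTransport {
  constructor() {
    this.emitter = new EventEmitter();
  }

  publish(channel, message) {
    this.emitter.emit(channel, message);
  }

  subscribe(channel, handler) {
    this.emitter.on(channel, handler);
  }
}

// Hypothetical notifications service: callers publish typed events for a
// user and never deal with the transport or its channel names directly.
class NotificationsService {
  constructor(transport) {
    this.transport = transport;
  }

  // Assumed channel naming scheme, for illustration only.
  channelFor(userId) {
    return `notifications:${userId}`;
  }

  publish(userId, type, payload) {
    const message = JSON.stringify({ type, payload, sentAt: Date.now() });
    this.transport.publish(this.channelFor(userId), message);
  }

  subscribe(userId, handler) {
    this.transport.subscribe(this.channelFor(userId), (raw) => {
      handler(JSON.parse(raw));
    });
  }
}

const notifications = new NotificationsService(new InMemoryTransport());
const received = [];

notifications.subscribe('user-1', (event) => received.push(event));
notifications.publish('user-1', 'email.opened', { messageId: 'abc123' });
// Nobody is subscribed to user-2, so this event is simply dropped.
notifications.publish('user-2', 'email.opened', { messageId: 'zzz999' });

console.log(received.length, received[0].type);
```

Because callers only see `publish` and `subscribe`, the transport can be swapped without touching them, which is the kind of flexibility an abstraction like this is meant to buy.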

Oct 2015: We moved most of the microservices from Modulus.io to AWS Elastic Beanstalk, mostly because Modulus.io didn’t offer enough monitoring capability. The main webapp stayed on Modulus.io for websocket support.

Dec 2015: We moved the main webapp from Modulus.io to Meteor Galaxy. Galaxy had just launched and we were building a relationship with the Meteor team (as their biggest app!), so it made sense to just host with them. This moved us entirely off Modulus.io.

Feb 2016: We noticed emails weren’t being sent sometimes and realized our distributed queue, Kue, was losing job data (!) and therefore users’ emails (!!!). We searched for alternatives and found one we liked, a queue called Bull. We switched all our backend queues to Bull, which solved the immediate data loss issue.

Jun 2016: While on a team offsite, literally in a car, we implemented Elasticsearch as the backing store for an inbox history feature called the Live Feed, served from a new microservice. We initially hosted Elasticsearch using Compose.io, but quickly moved it to Elastic Cloud, since Compose.io had stability issues.

...and that’s the first two years of Mixmax! These days, it’s hard to believe it took us so long to put anything on AWS, but there you have it: it was two years of rapid iteration, PaaS migrations, and growing a business from scratch. In the next installment, we’ll cover how Mixmax matured in its new post-monolith era!