This is the tenth post of twelve for Mixmax Advent 2020.

What is a Star Schema?

Have you ever had to build a schema for a Data Warehouse and had no idea where to start?

Maybe you've tried downloading the data from its source systems and put them into different tables per each entity. That's a good start, but then you have to make sure the data can be easy to use for end users. One way to do it is to create easier to use tables each time a new requirement comes, known as "report-driven development".

This method is quite problematic, for many reasons, including:

- Frequent updates are needed for the data to work correctly (e.g. cleanups, relationships, business logic, etc.) and have to be replicated each time you reuse the data. Plus any changes to those definitions will have to be applied to all reports using the same data if you want to avoid conflicts between reports

- You'll rework the same data many times because you'll have to reapply the cleanups, business logic, etc which applies to the source data each time.

- Your power users will need to learn each report's data separately, instead of learning some set of reusable tables they can join and create new reports

- It might be difficult to come up with a model that allows you to make it easy for the users to use the report while at the same time being able to explore the data

Instead, we chose to go with a Kimball-style Star Schema model, with some alterations. If you are unfamiliar with Ralph Kimball, he and his team are legends in the Data space, they wrote some of the best books on Data Warehousing and Business Intelligence (Which basically used to be the cool names for Data Engineering and Analysis 😉 ).

Essentially, with a star schema you create fact and dimension tables, the facts describing business processes (Customer ordered X - Item moved to Y - Feature Z used by customer - etc), and the dimensions describing the entities that perform those processes (Customers, Items, Stores, etc).

One of the requirements for something to be modeled as a dimension is that it changes slowly, Kimball calls them "Slowly Changing Dimensions" (SCD). e.g. Something that changes every 5 minutes would not normally be modeled as a dimension, or be included as a dimension attribute.

Example Star Schema

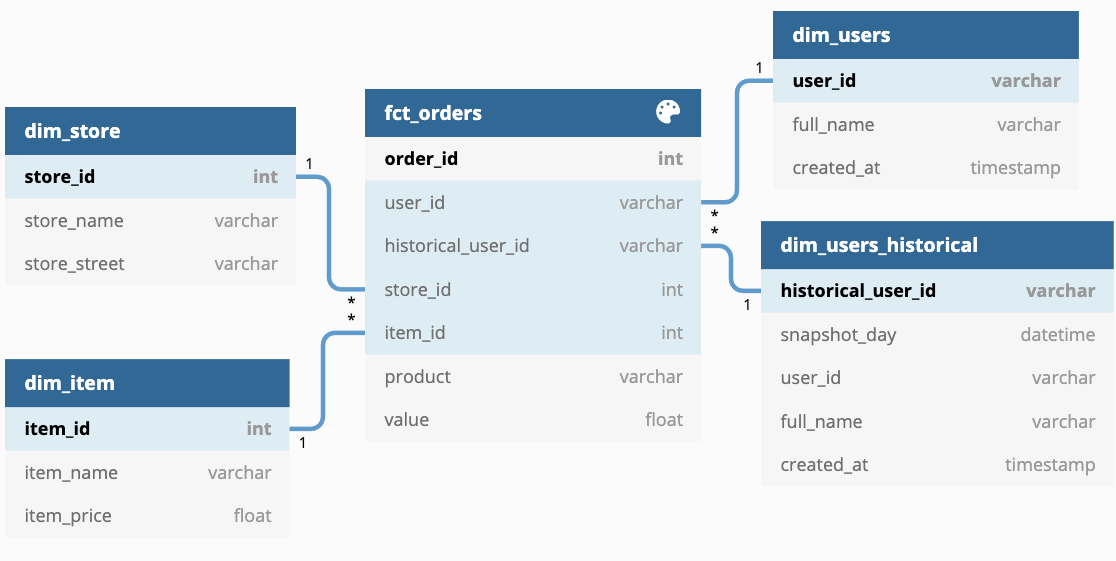

In this example, we have a table called fct_orders (Fact table of Orders), where each row (the grain) is an order made by a user, in which we have ids for the user who made the order, the item which was ordered and from which store.

Then there are dimensions for each of these objects, this way each of these ids can be joined to obtain the attributes. This has a couple of advantages:

- Any adjustments made to the dimensions to improve accuracy will affect all reports that use these dimensions

- It will be easier to develop new fact tables without having to think about all the attributes you may want to relate to this data, just add the relevant ids to your fact table and voilà! - Down the line this will save countless hours in new projects

- You’ll only need to document fields once in the place where you created them initially, without having to re-document them for every new report you create

- It’s easier to create scalable loading processes for star schemas than other kinds of modeling

Avoiding Normalization and Snowflaking

An important consideration is that this is very different from a normalized schema, where you try to avoid data duplication and is optimized for production usage. This type of schema is optimized for reporting instead, and is in fact “denormalized”, in our example above, suppose a user can have an associated company, and more than one user can be from the same company (Similar to how a workspace in Mixmax can allow many users to collaborate together).

In that case, instead of linking the users dimension with a new company dimension, we may add the “company_id” to “fct_orders”, and/or, directly add the relevant company attributes into the users dimension. Which solution to use depends a lot on your particular scenario. Either of these solutions avoids “Snowflaking”, which would mean that you are not using a Star schema, but a Snowflake schema.

Alterations on Kimball’s advice

All this said, some of the advice from Kimball is based on performance and tooling limitations of their time, we have some notable differences:

- We only do surrogate keys if we really need them, and we do it by creating a hash id as recommended in the DBT documentation. A surrogate key is an artificial key you create in the DWH instead of using the natural keys from the data source.

- We are a little bit less strict on the inclusion of measurements in dimensions, we allow some of them to be included if they are helpful

- We try to keep "Snowflaking" at a minimum (Joining two dimensions to each other) but we are not as strict as Kimball here, sometimes we allow it at least temporarily if we need to get something done quick

Related to this, we create the star schema using DBT, a modern open-source ETL tool which allows us to run SQL queries in an organized way for creating our data model in our Snowflake Data Warehouse. We run everything with machines on AWS Fargate, and use Airflow to coordinate dbt and non-dbt tasks together harmoniously.

Opinion of this model from our Data Analyst

Facts, dimensions and measures oh my! As a data analyst, working with a star schema has been life changing. In roles at other companies I worked with data warehouses that lacked a true schema. It was difficult to find fields, identify joins and know which of the 10 fields named the same was the right one. Then walks in Mixmax’s organized data warehouse that allows for easy data modeling (we use dbt!) with simple joins to get the data necessary to move forward.

It has made onboarding onto a new Business Intelligence (BI) tool a breeze. Earlier this year we purchased Looker as our BI tool, within just a few weeks we were able to accurately report on revenue reporting in Looker. Much of this is due to ease of the data model and the ability to join multiple data sources with simple keys. We were able to easily expose facts tables with the needed dimension tables to get our customers what they needed quickly.

A solid data warehouse structure will not only benefit Data Engineers, but will also allow Data Analysts to easily iterate, make changes and create new data assets. In addition, your stakeholders will enjoy the ease of use, short time to answer questions, and the ability to deliver value quicker.