Today we're announcing the v1.0 release of Bee-Queue: a fast, lightweight, robust Redis-backed job queue for Node.js. With the help of Bee-Queue's original author, Lewis Ellis, we revived the project to make it the fastest and most robust Redis-based distributed queue in the Node.js ecosystem. Mixmax is using Bee-Queue in production to process tens of millions of jobs each day.

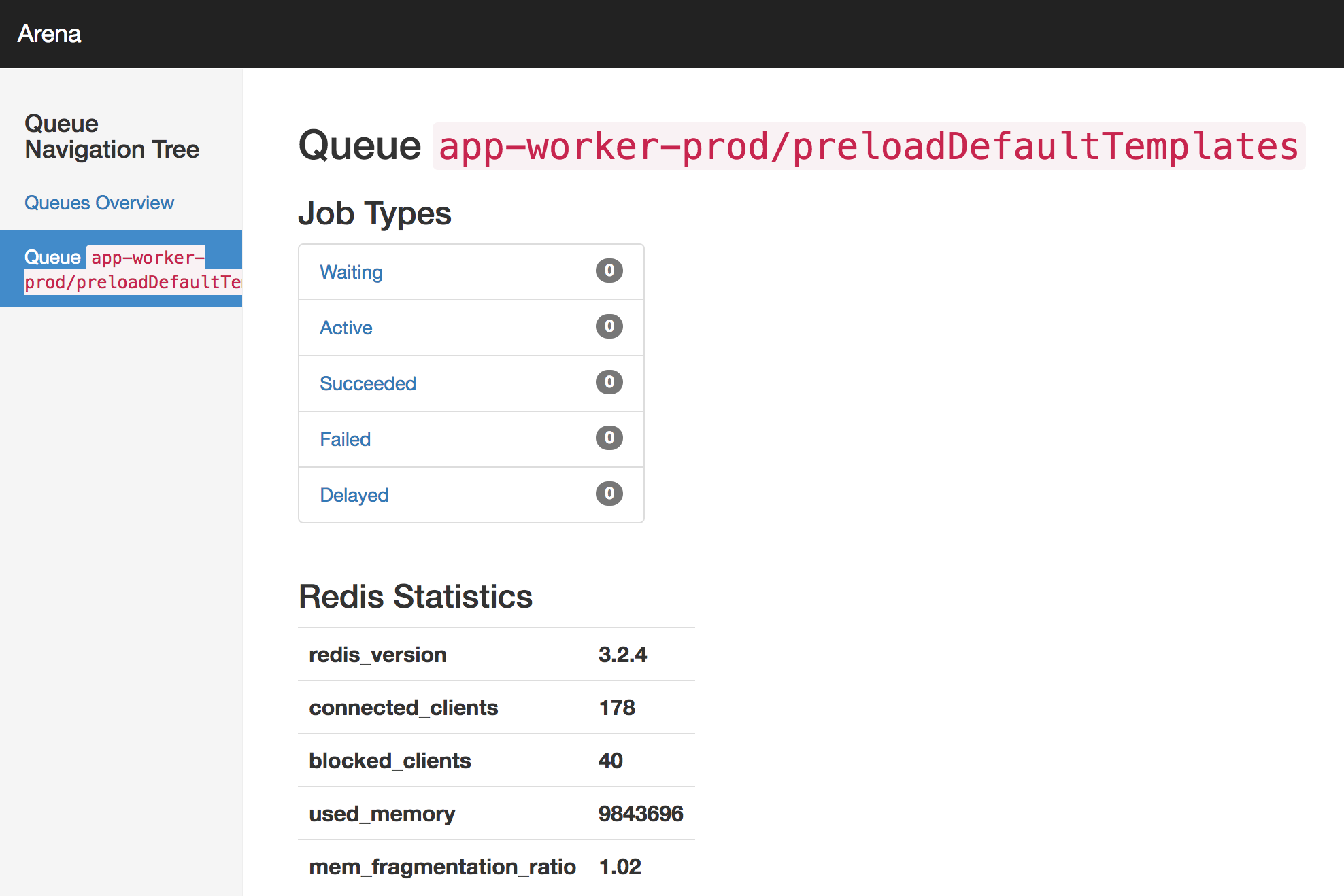

Bee-Queue is meant to power a distributed worker pool and was built with short, real-time jobs in mind. Scaling is as simple as running more workers, and Bee-Queue even has a useful interactive dashboard that we use to visually monitor job processing.
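As a rough illustration of that worker-pool model, the sketch below wires a producer and a worker around a queue object. It relies on Bee-Queue's `createJob(...).save()` and `process(concurrency, handler)` methods; the helper names `enqueueAddition` and `startAdditionWorker`, the queue name `addition`, and the job payload are hypothetical and chosen only for this example. The queue is passed in as an argument so the helpers never open a Redis connection on their own.

```javascript
// Hypothetical helpers; `queue` is expected to be a Bee-Queue instance,
// e.g. `new (require('bee-queue'))('addition')` with Redis reachable.

// Producer side: create a job carrying the payload and persist it to the queue.
async function enqueueAddition(queue, x, y) {
  const job = queue.createJob({ x, y });
  return job.save(); // resolves once the job has been stored
}

// Worker side: register a handler that computes the job's result.
// Run this in as many processes as needed against the same queue name.
function startAdditionWorker(queue, concurrency = 1) {
  queue.process(concurrency, async (job) => job.data.x + job.data.y);
}
```

In this sketch, adding capacity amounts to calling `startAdditionWorker` from additional processes that point at the same queue.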

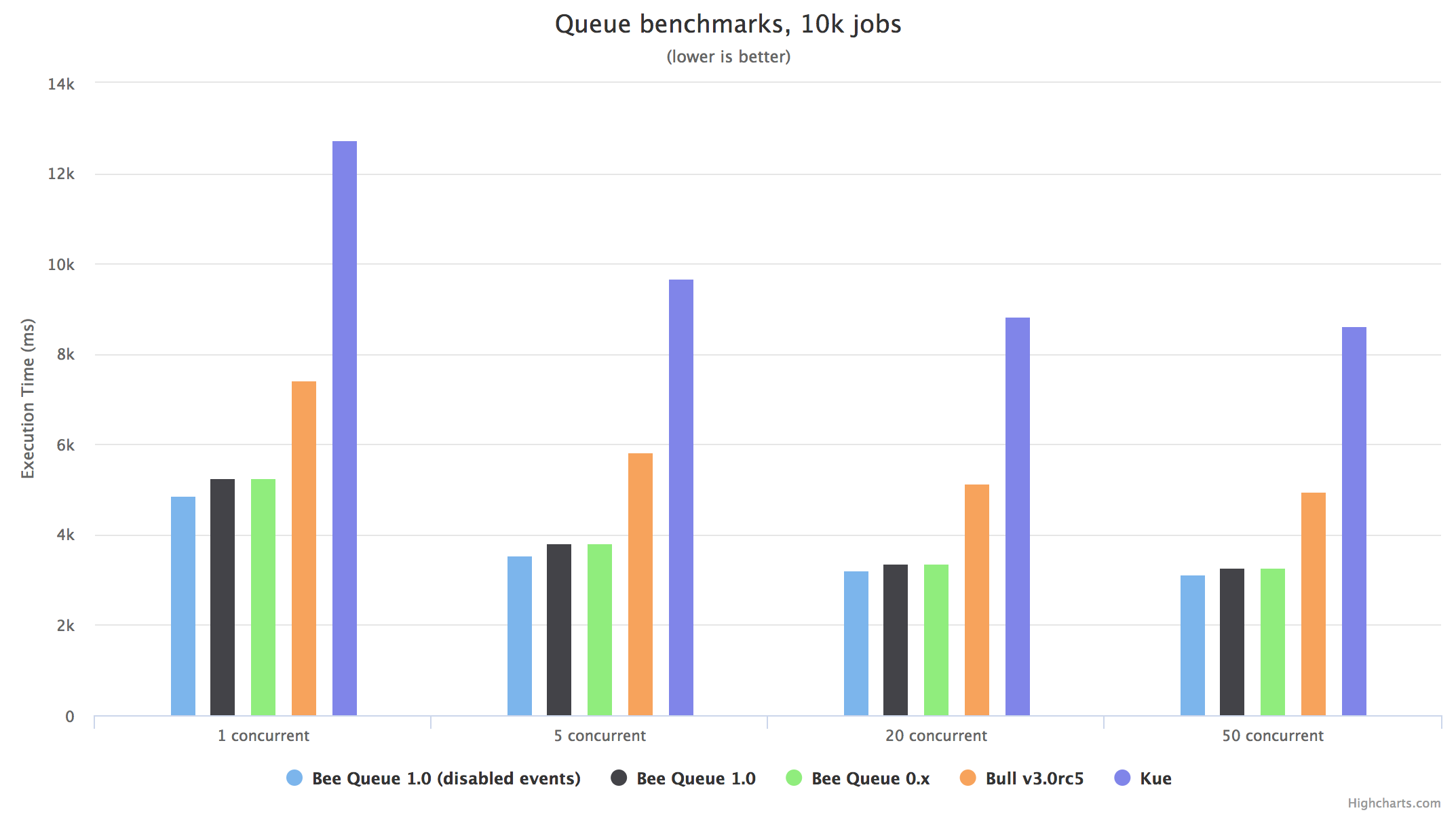

Here is how Bee-Queue v1.0 compares to other Redis-based job queues in the Node ecosystem (including its own prior v0.x release):

Why Bee-Queue?

So, why another job queue in the Node.js ecosystem? Up until now, Mixmax had been using a similar queue, Bull. Bull (v2) served us well for a year, but it had a race condition that caused some jobs to be double-processed, which led to major problems at scale. That race condition was fixed by re-architecting the job processing mechanism, which is currently being released as Bull v3. Unfortunately, Bull v3 also suffered a significant performance regression relative to Bull v1 and v2. We were faced with a decision: fix the performance regression in Bull v3, move entirely to a different queue such as RabbitMQ, or start over and write our own simple high-performance Redis-based queue.

We started our investigation by looking into what it'd take to fix the performance regression in Bull v3, but we quickly realized that it would have been a huge effort. Fixing the performance regression while maintaining its rich set of features (many of which we weren’t using) would have taken many weeks of work. Since Redis CPU was our primary concern, we needed to minimize the number of Redis calls to process each job, ideally down to our theoretical minimum of 3. We also felt a lack of goal alignment with the project: Bull is feature-rich and generally designed for long-running jobs. We were processing millions of jobs in a small amount of time and needed to keep performance & stability as the top priority.
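To make the "3 Redis calls per job" target concrete, here is a minimal in-memory sketch that counts round trips over one job's lifecycle. The post does not say which three calls are meant; the enqueue / claim / finish split below is an assumption, and `FakeRedis` and `processOne` are hypothetical names standing in for a real Redis client and worker loop. It is not Bee-Queue's actual implementation.

```javascript
// In-memory stand-in for Redis that counts round trips. Not a real client.
class FakeRedis {
  constructor() {
    this.calls = 0;
    this.waiting = [];
    this.active = [];
  }

  // Call 1 (assumed): the producer pushes the job onto the waiting list.
  enqueue(job) {
    this.calls += 1;
    this.waiting.unshift(job);
  }

  // Call 2 (assumed): a worker moves one job from waiting to active in one step.
  claim() {
    this.calls += 1;
    const job = this.waiting.pop();
    if (job !== undefined) this.active.unshift(job);
    return job;
  }

  // Call 3 (assumed): the worker removes the finished job from the active list.
  finish(job) {
    this.calls += 1;
    this.active = this.active.filter((j) => j !== job);
  }
}

// Push a single job through its whole lifecycle and return the handler's result.
function processOne(redis, payload, handler) {
  redis.enqueue(payload);
  const job = redis.claim();
  const result = handler(job);
  redis.finish(job);
  return result;
}

const redis = new FakeRedis();
const result = processOne(redis, { x: 2, y: 3 }, (job) => job.x + job.y);
console.log(result, redis.calls); // 5 3
```

Any extra bookkeeping per job (progress updates, per-state events, additional lookups) would add to that count, which is why a feature-rich queue is hard to squeeze down to this floor.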

We wanted to hold off on using RabbitMQ; we didn’t want to take on the devops burden of hosting it ourselves (by comparison, we host Redis using very stable AWS ElastiCache, internal to our VPC). We could have also used a third-party host, but that meant all our high-volume event processing traffic would be leaving our network. We use Redis extensively at Mixmax and our team has core competency in it, so we wanted to stick with a Redis-based queue. Additionally, we wanted to minimize the time we spent on this project, and moving to an entirely different framework and technology would have meant significant engineering work before we would feel comfortable relying on it in production.

That left a third option: building our own lightweight Redis-based queue that did only what we needed, so that performance could stay the priority. Before starting the project from scratch, we rediscovered Bee-Queue, having first seen it a couple of years ago. We evaluated its codebase again and quickly realized that it’d be a great platform to build upon. Building on an existing queue saved us weeks of implementation time; we refreshed the codebase, identified the missing features we needed, and added those with the help of the original author, Lewis.

Successful launch

We released Bee-Queue v1.0 this week and have switched over to using it entirely instead of Bull. All our production traffic is now flowing over Bee-Queue and we haven’t seen any problems. Resource usage has declined dramatically, as measured by lower Node.js and Redis CPU usage. We were also able to repurpose existing tooling from our Bull days to work with Bee-Queue. Building up Bee-Queue to serve our needs ended up taking the least time of the three options, since we didn’t need to rewrite any application code or even change our existing monitoring infrastructure, which was already built to sit on top of Redis.

Bee-Queue is now hosted in a new GitHub organization that we co-maintain with Lewis. We encourage you to check it out and contribute; if you're looking for a more fully featured queue, check out Bull. Our goal with Bee-Queue is to keep it small, with performance and stability as the top priorities.

Special thanks to Lewis Ellis for editing this post.

Enjoy solving big problems like these and contributing to the open source ecosystem? Come join us.