Here at Mixmax it’s no secret that we use Mongo for a lot of different things. One issue that we’d had for a long time though was our ability to monitor our Mongo clusters for slow queries. This is an issue because if an engineer adds a new query to our codebase, but that query doesn’t use an index, trouble will almost always ensure - IOPS will go through the roof, we’ll start seeing Mongo churn through its internal cache because now it’s having to scan full collections, just a whole collection of really bad things.

We knew we could try monitoring the slow query log for this, but we didn’t like the idea, nor the fragility of monitoring a continuous stream (we didn’t have the best experience tailing Mongo’s oplog for reactivity in Meteor). Instead, we figured we’d be able to catch the worst culprits simply by periodically querying which queries had been running for a long time. To do this, we wrote, and open-sourced, mongo-slow-queries.

Initializing mongo-slow-queries

Initializing mongo-slow-queries is as simple as giving it a Mongo DB reference to use to query.

const mongojs = require('mongojs');

const MongoSlowQueryChecker = require('mongo-slow-queries');

let db = mongojs('mongodb://localhost:27017/my-db');

let slowQueries = new MongoSlowQueryChecker({ db });By default, the mongo-slow-query checker will classify a slow query as anything that is currently running and has already been running for more than five seconds. To set this to a different threshold, provide the queryThreshold parameter then initializing a new instance:

let slowQueries = new MongoSlowQueryChecker({

db,

queryThreshold: 2 // The unit is seconds.

});Using mongo-slow-queries

Once you’ve created an instance to check for slow queries with, usage is simply:

slowQueries.get((err, queries) => {

if (err) {

console.log('Failed to retrieve slow queries: ' + err);

} else {

console.log('slow queries: ' + JSON.stringify(queries));

}

});Notes on the returned queries

To make aggregating slow queries easier, we fingerprint every query that we tag as slow. This means we remove the unique components of a query, while tagging what fields we’re filtering on. As an example, the following query:

{

_id: 'foo',

$or: [{

createdAt: {

otherIds: ['bar']

}

}]

}Would have a fingerprint of:

{ _id, $or: [ { createdAt: { otherIds: [ ] } } ] }We also tag queries with other pertinent information such as what query the collection is primarily running against and if the query is using an index or not.

How we use mongo-slow-queries @ Mixmax

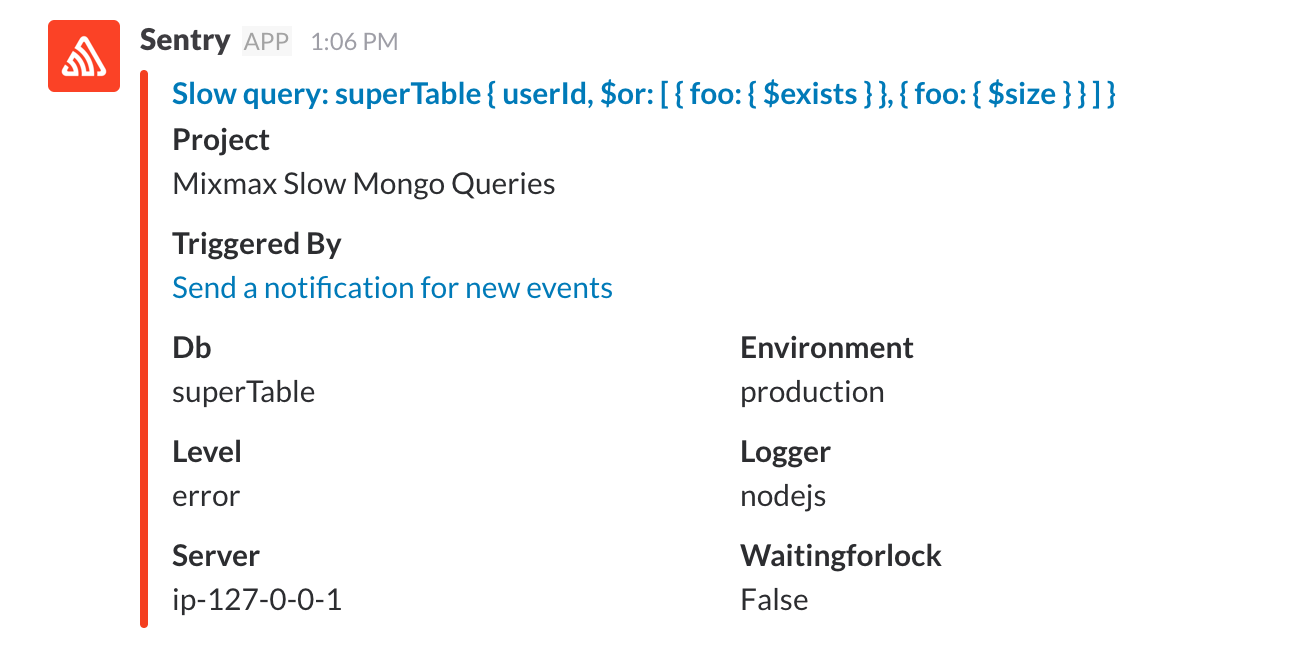

At Mixmax, we run these queries via a distributed cron job. These means we’re able to constantly query for slow queries, without tailing or hammering our DB. We then pipe any and all slow queries that are found to Sentry, our error reporting system, where we roll them up by fingerprint and then post to a Slack channel. This means we get alerts for any new slow queries, allowing us to take action immediately (as seen below).

Enjoy building next-gen monitoring to be able to predict infrastructural issues? Drop us a line.